What's in a name? When it comes to partially automated driving systems, apparently a lot.

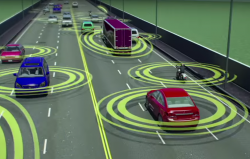

The latest research from AAA indicates 40 percent of U.S. consumers believe partially automated driving systems can do all the driving, a scary proposition for all drivers on the roads.

Names such as Autopilot, Pilot Assist and ProPILOT allegedly confuse some drivers, who stop paying attention to their surroundings because they believe the car handles all the driving chores.

Beyond the confusing names some automakers use, AAA researchers also tested the systems in four vehicles and found they had serious problems dealing with stationary vehicles, poor lane markings and unusual traffic patterns.

To research partially automated vehicle capabilities, AAA conducted tests on the closed surface streets of the Auto Club Speedway in Fontana, California. Additional tests were conducted on highways and limited-access freeways in the Los Angeles area.

Researchers used a 2018 Mercedes-Benz S-Class, 2018 Nissan Rogue, 2017 Tesla Model S and 2019 Volvo XC40, all equipped with standard partially automated driving features.

On public roadways, the test vehicles had problems in moderate traffic, on curved roads and on streets with busy intersections.

"Researchers noted many instances where the test vehicle experienced issues like lane departures, hugging lane markers, 'ping-ponging' within the lane, inadequate braking, unexpected speed changes and inappropriate following distances" - AAA

According to researchers, the systems typically performed best on open freeways and on freeways with stop-and-go traffic. However, nearly 90 percent of events requiring driver intervention were caused by the test vehicle's inability to maintain its lane position.

The closed-track tests simulated several driving scenarios, such as following an impaired driver, approaching a tow truck, and following a lead vehicle that suddenly changed lanes to reveal a stopped vehicle in the road.

AAA says all the vehicles successfully maintained lane position and recognized and reacted to the presence of the tow truck with little to no difficulty.

However, three of the four test vehicles required the drivers to intervene and take control to avoid an imminent crash when a lead vehicle changed lanes to reveal a stationary vehicle.

Real-world examples already exist: some drivers claim they crashed because they believed their cars could take full control in all driving situations, even though the owner's manuals clearly say otherwise.

Within the past few months, Tesla has been sued twice by drivers who crashed while not paying attention to the road. In one case, a Tesla Model S struck a stalled vehicle at 80 mph because the driver believed the car would stop on its own.

A separate lawsuit alleges that Tesla's marketing of its Autopilot system deceived the driver, leading her to take her hands off the wheel for 80 seconds just before the crash.

AAA's research isn't the first to show how drivers can be confused by the different names used for similar features offered by various automakers.

In a paper released by the Thatcham Research Center and the Association of British Insurers, researchers found consumers can easily fail to understand the differences between advanced driver assistance systems and fully automated driving technology. This confusion can lead drivers to ignore the need to stay fully alert and ready to handle all driving conditions.

Researchers also say confused drivers may not understand that they can be held fully legally responsible for any crash that occurs.